The world of public health in the United States has always had a soft spot for data published in the Morbidity and Mortality Weekly Report (MMWR), the CDC’s linchpin publication identifying trends and outcomes from various findings. In short, it holds substantial sway over our health policy decisions. Such influence, of course, necessitates a close examination of its credibility. Our friends and renowned professionals - Dr. Tracy Beth Høeg, Dr. Alyson Haslam, and Dr. Vinay Prasad - carried out a comprehensive analysis of MMWR findings on face masks, presented in their paper, "Characteristics and Quality of Studies from 1978 to 2023."

Their critical examination (now peer-reviewed and published) aims to shed light on how MMWR reports are ordered, designed, and presented, especially concerning mask-related health policies. Methodologically, the researchers undertook a retrospective cross-sectional study of MMWR's publications on masks from 1978 to 2023, recording the particulars of each study's design and determining whether the study could assess mask effectiveness.

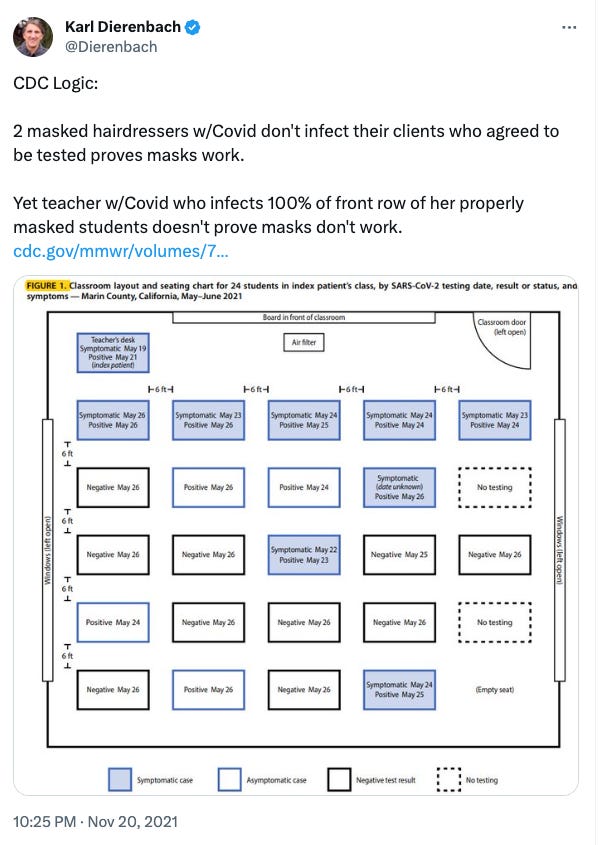

Of course, our team has been calling out the crazies since day 1:

https://x.com/Dierenbach/status/1462306263795175424?s=20

In Høeg, Haslam, and Prasad's sampling of 77 studies, all published after 2019, they found a glaring contradiction. Despite 75.3% (or 58 out of 77) of MMWR's findings stating that masks were effective, only 29.9% (23 out of 77) assessed mask effectiveness, and a scant 14.3% (11 out of 77) were statistically significant.
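For readers who want to check the arithmetic, here is a minimal sketch that recomputes the percentages quoted above from the raw counts. The counts come straight from the paragraph; the variable and function names are just illustrative choices, not anything taken from the paper.

```python
# Counts quoted in the paragraph above; names are illustrative only.
TOTAL_STUDIES = 77

counts = {
    "concluded masks were effective": 58,
    "assessed mask effectiveness": 23,
    "statistically significant": 11,
}


def percent(count, total):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Share of the 77 studies that falls into each category.
shares = {label: percent(n, TOTAL_STUDIES) for label, n in counts.items()}

for label, share in shares.items():
    print(f"{label}: {share}%")
```

The computed shares match the 75.3%, 29.9%, and 14.3% figures reported in the text.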

Moreover, not a single study in their sampling was randomized, a critical element in ensuring a study's validity.

"Over half [of the studies] drew causal conclusions," the researchers note, pointing out that a mere 1.3% of them used appropriate causal language, and none cited randomized data. Surely, then, such conclusions are as structurally sound as a house built on sand. A staggering 70.7% resorted to using causal language - an egregious misuse of terminology and methodology given the dearth of supporting data.

The gulf between the content and the conclusions of these studies is perplexing and, indeed, alarming. As the researchers put it, "the level of evidence generated was low and the conclusions were most often unsupported by the data," turning the spotlight on the credibility of the reported findings.

Høeg, Haslam, and Prasad's scrutiny of MMWR's reports raises serious concerns about the journal's reliability in informing health policy in the United States. Their work is a pointed reminder of the need for precision, accuracy, and rigorous scientific method in public health research - attributes that shape and determine the effectiveness of our health policies and ensure credible evidence-based actions.

If I can share an editorial comment/suggestion, it would be this: Substack readers need to become a part of the “10 percent (paid)” … not the current 1 to 4 percent.

Just like our adversaries are tripling down on their lies and cover-ups, our side needs to triple down on our support of the people who scare them the most.

In my latest article, I expound on several key Substack metrics and present one easy “solution” that would help our side more effectively fight - and scare - our adversaries …

Please read and share if you happen to think like I do.

https://billricejr.substack.com/p/fear-probably-explains-why-substacks?utm_source=profile&utm_medium=reader2